In this post, we document the high level steps required to connect Oracle Business Intelligence 11.1.1.9 to Apache Spark using Spark SQL. The goal is to be able to run interactive queries in OBIEE using Spark as a data source. OBIEE 11.1.1.7 introduced the capabilities to integrate with Hadoop sources using the Hive server1 ODBC interface. A connector by Cloudera allows connecting to Hive Server 2 an improved version of Hive. Apache Spark SQL supports reading and writing data stored in Apache Hive. For this experiment, we are running OBIEE in Windows 7 and Hive, Spark in Linux Mint.

- Oracle Business Intelligence Enterprise Edition 11.1.1.9

- Cloudera ODBC Driver for Hive (v2.5.12)

Once our environment is ready, we start our Apache Spark service:

Once the service is up and running, you should be able to navigate to http://[hostname]:8080

Next, we start the Spark Thrift Server service and direct it to connect to the Spark service;

For more details about the Apache Spark Thrift Server, visit the following link.

Now that the environment is ready, let’s create a connection to Hive. This is done by creating and ODBC data source in Windows. The connection to use is the “Cloudera ODBC Driver for Apache Hive”.

To configure the ODBC connection, simply add host / port of the thrift interface, and make sure that HiveServer2 is selected. Authentication on Spark is disabled by default.

Let’s load some data and have fun! We will be using a new command shell called beeline that works with Hive Server2.

and connect to Hive

Using the beeline command shell, we load a subset of columns from the Chicago crime data set.

create table crime

(

ID STRING,

BLOCK STRING,

PRIMARY_TYPE STRING,

DESCRIPTION STRING,

LOCATIONDESC STRING,

YEAR INT,

LAT DOUBLE,

LON DOUBLE

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n'

STORED AS TEXTFILE

LOCATION '/tmp/data/crime.csv';

Now that we’ve created a data source and loaded data into hive, we need to create the metadata in in the OBIEE administration tool.

We first create a new Database (Right Click on the physical layer-> New Database)

Then a connection Pool

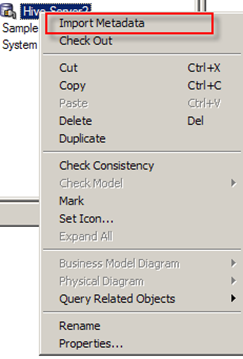

Let’s test the connection, this is done by right clicking on the connection pool and selecting import Metadata

If the connection is successful, the Import Metadata window is displayed

Click Next

For some reason, the hive tables are not listed under the “default” database; click on the shuttled icon then Finish.

The Physical catalog HIVE is created under the Hive Server2 connection pool

We need to create the “crime” table manually in OBIEE. This is done by right clicking on the “HIVE” physical catalog->New Object->Physical Table

The key thing to note is that the table name (CRIME) and the physical columns must match those in the hive database.

We then create 2 aliases then a business model in order to be able to create simple interactive reports in OBIEE Answers.

We also create a simple measure: count of ID to represent the number of crime occurrences.

We’re now ready to create a simple report in OBIEE, the first report will show the # of crime occurrences versus year (2001 through 2015). As soon as the job is submitted, we can log into Spark UI to monitor the job. Sure enough, we see one job being run

After a few seconds, the job completes

The results are displayed in OBIEE.

We then add the crime type in a pivot table

We add a tree map visualization to display the number of crime occurrences by crime type for 2015

In this post, we connected OBIEE to Hive integrated with Spark SQL; we then created analysis using the Hive-Spark data source.

obiee training in Hyderabad

ReplyDeletewe are offering best Obiee online training with work support and job assistance and high quality training facilities and well expert faculty

for other details and register your demo contact

obiee online training

Institute : Vguru Online Trainings

Contact number: +91-9885681705

Email Id: vguruonline@gmail.com

ReplyDeleteThank you for providing useful content Big data hadoop online training Hyderabad