Apache Spark is a promising open-source cluster computing framework originally developed in the AMPLab at UC Berkeley. It allows data analysts to iterate rapidly over data using techniques such as machine learning and streaming, which require fast, in-memory data processing.

The goal of this post is to show how to get set up to run Spark locally on your machine.

One of the attractive features of Spark is the ability to perform data analysis interactively from the command-line REPL or an IDE. We’ll work with the IntelliJ IDEA IDE to experiment with Spark. To get started, you’ll need to install the following:

- IntelliJ IDEA Community Edition

- Scala

- Scala IDEA Plugin

- JDK

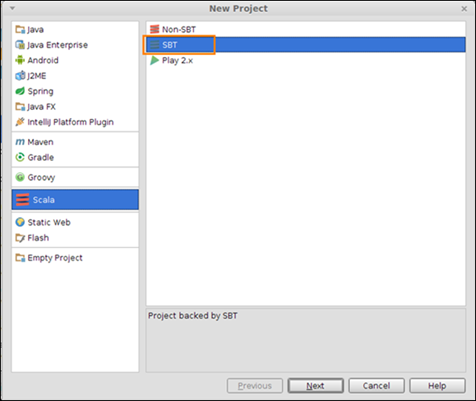

Once the software stack listed above is installed, start a new IDEA project:

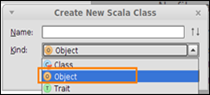

To create a Scala program with a main method, select “Object” from the drop-down.

The new project workspace is displayed.

Notice the build.sbt file. sbt is a build and dependency management tool similar to Maven. Update your build.sbt file with:

name := "HelloWorld"

version := "1.0"

libraryDependencies += "org.apache.spark" % "spark-core_2.10" % "1.2.0"

This will cause IDEA to download all dependencies needed by the project.
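The `_2.10` suffix on the artifact name is the Scala version the library was built for, so the project's Scala version should match it. A minimal sketch of a build.sbt that makes this explicit follows. The `scalaVersion` value `2.10.4` is an assumption rather than something from the original setup, and any 2.10.x release should behave the same way. Using `%%` asks sbt to append the Scala binary version to the artifact name itself.

```scala
name := "HelloWorld"

version := "1.0"

// Assumption: a 2.10.x Scala release, matching the _2.10 Spark artifact
scalaVersion := "2.10.4"

// %% appends the Scala binary version, so this resolves to spark-core_2.10
libraryDependencies += "org.apache.spark" %% "spark-core" % "1.2.0"
```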

Update the Scala code as follows:

    /**
     * Getting Started with Apache Spark
     */
    import org.apache.spark.{SparkContext, SparkConf}

    object HelloWorld {
      val conf = new SparkConf().setAppName("HelloWorld").setMaster("local[4]")
      val sc = new SparkContext(conf)

      def main(args: Array[String]) {
        println("Hello, world!")
      }
    }

The first line of the HelloWorld object sets common properties for your Spark application. In this case, we’re setting the application name and the master to local[4], which runs Spark locally with four worker threads. The second line creates a SparkContext, which tells Spark how to access a cluster.

We’re done setting up our first Spark application. Let’s run it!
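The program above creates a SparkContext but never asks it to do any work. As a rough sketch of what using the context might look like, the example below distributes a small range of numbers across the local threads and sums their squares. The object name `SumExample` and the helper `sumOfSquares` are hypothetical names introduced for illustration; they are not part of the original project.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical example object; not part of the original HelloWorld project.
object SumExample {

  // Turns the numbers into an RDD, squares each element in parallel,
  // and adds the results together.
  def sumOfSquares(sc: SparkContext, numbers: Seq[Int]): Int =
    sc.parallelize(numbers).map(n => n * n).reduce(_ + _)

  def main(args: Array[String]): Unit = {
    // Same settings as HelloWorld: an application name and four local threads.
    val conf = new SparkConf().setAppName("SumExample").setMaster("local[4]")
    val sc = new SparkContext(conf)
    try {
      println("Sum of squares 1..10: " + sumOfSquares(sc, 1 to 10))
    } finally {
      sc.stop() // release the local Spark resources
    }
  }
}
```

When run, the line `Sum of squares 1..10: 385` should appear among Spark's log output. Creating the context inside `main` and stopping it in a `finally` block is one way to make sure the local Spark resources are released even if the job fails.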

Success!
