- how to debug a Spark program

- how to load a CSV file in Spark and perform a few calculations

This post assumes that you’ve followed the previous post, “first steps with Apache Spark”.

Start IDEA and create a new Scala project, then create a new Scala object named “LoadData” on the next screen.

The data file to be loaded is a CSV file containing monthly temperature data for Tucson, AZ, downloaded from the United States Historical Climatology Network (HCN). The data covers the period 1893 through December 2013. The file format is as follows:

| Station ID | Year | Month | Precipitation | Minimum Temperature | Average Temperature | Maximum Temperature |
| --- | --- | --- | --- | --- | --- | --- |
| String | Integer | Integer | Double | Double | Double | Double |

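Each row is mapped to the following case class, which keeps the year, the month, and the three temperature columns:

    case class TempData(year: Int, month: Int, tMaximum: Double, tAverage: Double, tMinimum: Double)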

The code for the LoadData Scala object is as follows:

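    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object LoadData {
      def main(args: Array[String]): Unit = {
        // Run Spark locally with 4 worker threads
        val conf = new SparkConf().setAppName("LoadData").setMaster("local[4]")
        val sc = new SparkContext(conf)
        val source = sc.textFile("AZ028815_9131.csv")

        case class TempData(year: Int, month: Int, tMaximum: Double, tAverage: Double, tMinimum: Double)

        // Skip rows containing ",.", split the remaining rows into fields, and build
        // a TempData per row. Per the file format above, columns 4, 5 and 6 hold the
        // minimum, average and maximum temperature.
        val tempData = source.filter(!_.contains(",.")).map(_.split(","))
          .map(p => TempData(year = p(1).toInt, month = p(2).toInt,
            tMinimum = p(4).toDouble, tAverage = p(5).toDouble, tMaximum = p(6).toDouble))
      }
    }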

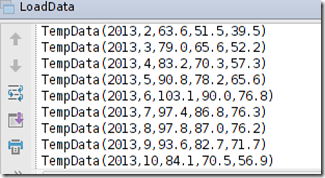
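The parsing step can also be tried without Spark, which makes it easier to step through in the debugger. The following is only a sketch: the sample rows are invented for illustration, `ParseSketch` and `parseLine` are hypothetical names, and the column order is taken from the file format described above. Unlike the `filter` in LoadData, it rejects a row only when one of the fields it actually reads fails to parse.

```scala
import scala.util.Try

object ParseSketch {
  // Same fields as the TempData case class used in LoadData.
  case class TempData(year: Int, month: Int, tMaximum: Double, tAverage: Double, tMinimum: Double)

  // Parse one CSV line; returns None when the row is too short
  // or when a field that is read is not a valid number.
  def parseLine(line: String): Option[TempData] = {
    val p = line.split(",").map(_.trim)
    if (p.length < 7) None
    else Try(
      TempData(
        year = p(1).toInt,
        month = p(2).toInt,
        tMinimum = p(4).toDouble,
        tAverage = p(5).toDouble,
        tMaximum = p(6).toDouble
      )
    ).toOption
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical rows, not taken from the real data file.
    val sample = Seq(
      "028815,1950,7,1.25,74.1,86.3,98.5", // complete row
      "028815,1950,8,0.10,.,84.9,96.8",    // "." in a temperature column (assumed missing-value marker)
      "028815,1950,9,0.40,68.2,81.0"       // truncated row
    )
    // Only the complete row survives parsing.
    sample.flatMap(parseLine).foreach(println)
  }
}
```

Returning an `Option` keeps bad rows from throwing in the middle of a job; in Spark the same function could be applied with `flatMap` on the RDD of lines.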

Let’s verify that the data is loaded correctly by adding a print statement:

    tempData.foreach(println)
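Printing every record produces a lot of output for more than a century of monthly data. A lighter check is to count the records and print only the first few. The sketch below assumes a small hand-made RDD in place of the parsed file; the sample values and the names `InspectSketch` and `countAndPeek` are hypothetical.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object InspectSketch {
  // Same fields as the TempData case class used in LoadData.
  case class TempData(year: Int, month: Int, tMaximum: Double, tAverage: Double, tMinimum: Double)

  // Return the total number of records and the first n of them.
  def countAndPeek(rdd: RDD[TempData], n: Int): (Long, Array[TempData]) =
    (rdd.count(), rdd.take(n))

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("InspectSketch").setMaster("local[4]")
    val sc = new SparkContext(conf)
    try {
      // Hypothetical records standing in for the parsed CSV rows.
      val tempData = sc.parallelize(Seq(
        TempData(1950, 7, 98.5, 86.3, 74.1),
        TempData(1950, 8, 96.8, 84.9, 73.0),
        TempData(1951, 7, 99.1, 87.0, 74.9)
      ))
      val (total, firstRows) = countAndPeek(tempData, 2)
      println(s"records: $total")
      firstRows.foreach(println)
    } finally {
      sc.stop() // release the local Spark context
    }
  }
}
```

Both `count` and `take` bring their results back to the driver, so the output appears in the IDE console in a predictable order, whereas `foreach(println)` runs on the worker threads and may interleave lines.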
